Overview of Qwen2-72B-Instruct

Qwen2-72B-Instruct, developed by Alibaba, is the instruction-tuned 72-billion-parameter model in the Qwen2 series. It supports 29 languages and covers both natural language understanding and generation.

1.1 Developer and Model Series

Qwen2-72B-Instruct is developed by Alibaba as part of the Qwen2 model series, which includes dense variants with 0.5B, 1.5B, 7B, and 72B parameters, along with a 57B-A14B mixture-of-experts model. The Instruct variant is tuned to follow instructions, which suits it to interactive and guided applications. Within the series, the 72B version is the most capable for complex natural language understanding and generation.

1.2 Parameter Size and Capabilities

With 72 billion parameters, Qwen2-72B-Instruct is the largest model in the Qwen2 series. This size supports deep natural language understanding, fluent text generation, and strong performance on complex tasks. The model covers multilingual work, coding, mathematics, and reasoning, and its long context window lets it handle extensive inputs in both research and practical use cases.

1.3 Language Support and Multilingual Tasks

Qwen2-72B-Instruct supports 29 languages, including English and Chinese. Training on diverse linguistic data lets the model handle cross-lingual tasks such as translation, language understanding, and generation. It is built to process multilingual inputs with consistent performance across languages, which makes it useful for global applications that need communication and collaboration across linguistic boundaries.

1.4 Model Series and Variants

The Qwen2 series includes models of various sizes: 0.5B, 1.5B, 7B, and 72B parameters, plus a 57B-A14B mixture-of-experts model. Qwen2-72B-Instruct is the instruction-tuned variant of the 72B model, which improves its ability to follow directives and generate accurate responses. It builds on the Qwen2 architecture to handle complex language tasks in both general and specialized applications, and it can be integrated into a range of use cases.

Technical Specifications

Qwen2-72B-Instruct offers a context length of 131,072 tokens (128K), enabling extensive input handling. It supports multilingual tasks across 29 languages.

2.1 Context Length and Token Capacity

Qwen2-72B-Instruct supports a context length of up to 131,072 tokens, which lets it process and analyze long texts in a single pass. This capacity suits tasks that depend on detailed context, such as complex queries and lengthy interactions where coherence has to be maintained.

2.2 Input and Output Limits

Qwen2-72B-Instruct has defined limits for input and output processing. The prompt and the generated output share the same context window, so together they cannot exceed the 131,072-token context length; a longer prompt leaves less room for the response. These limits keep resource use bounded while still leaving room for large-scale tasks that need substantial input and output.
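A minimal sketch of a token budget check, assuming the prompt and the generated output share one context window of 131,072 tokens (the figure given in section 2.1). The function names are hypothetical and only illustrate the arithmetic.

```python
CONTEXT_WINDOW = 131_072  # context length stated in section 2.1


def max_new_tokens_allowed(prompt_tokens: int, context_window: int = CONTEXT_WINDOW) -> int:
    """Return how many tokens can still be generated after the prompt."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # A prompt that already fills (or overflows) the window leaves no room.
    return max(context_window - prompt_tokens, 0)


def fits(prompt_tokens: int, requested_new_tokens: int,
         context_window: int = CONTEXT_WINDOW) -> bool:
    """True when prompt plus requested output stays inside the window."""
    return requested_new_tokens <= max_new_tokens_allowed(prompt_tokens, context_window)


print(max_new_tokens_allowed(100_000))
print(fits(131_000, 100))
```

With a 100,000-token prompt, 31,072 tokens remain for the response; a 131,000-token prompt cannot accommodate a 100-token reply.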

2.3 Token Processing and Scaling

Qwen2-72B-Instruct can process up to 131,072 tokens in context. It uses YaRN-based scaling to extend beyond its native 32K-token (32,768) window, a scaling factor of four. This lets the model work with large texts in applications that require detailed analysis and multi-step reasoning.
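The relationship between the native and extended windows can be sketched as a small configuration helper. The key names (`rope_scaling`, `factor`, `original_max_position_embeddings`, `type`) follow the convention used in Hugging Face-style `config.json` files as far as I know; treat them as an assumption and check the model card before relying on them. The helper function itself is hypothetical.

```python
import json

NATIVE_CONTEXT = 32_768    # native window ("32K") mentioned above
TARGET_CONTEXT = 131_072   # extended window mentioned above


def yarn_rope_scaling(native: int, target: int) -> dict:
    """Build a rope_scaling entry that stretches `native` positions to `target`."""
    if target % native != 0:
        raise ValueError("this sketch expects target to be a whole multiple of native")
    return {
        "type": "yarn",
        "factor": float(target // native),            # 131072 / 32768 = 4.0
        "original_max_position_embeddings": native,
    }


# Fragment that would be merged into the model configuration (assumed layout).
config_patch = {"rope_scaling": yarn_rope_scaling(NATIVE_CONTEXT, TARGET_CONTEXT)}
print(json.dumps(config_patch, indent=2))
```

The factor of 4.0 is simply the ratio of the two context lengths quoted in the text.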

Core Capabilities

Qwen2-72B-Instruct covers natural language understanding, multilingual tasks across 29 languages, and coding, mathematics, and reasoning, which makes it versatile across applications.

3.1 Natural Language Understanding (NLU)

Qwen2-72B-Instruct shows strong Natural Language Understanding (NLU) and can comprehend complex texts accurately. It identifies intent, entities, and relationships within text, which makes it effective for tasks like information extraction, summarization, and question answering. Training on diverse datasets supports this performance across languages and domains.

3.2 Text Generation and Response Quality

Qwen2-72B-Instruct produces coherent, contextually relevant text. Instruction tuning aligns its responses with user intent, and the model generates clear, well-structured output for tasks ranging from creative writing to technical explanations. Trained on a large-scale dataset, it maintains quality across languages and domains, and its long context window helps it deliver detailed, contextually appropriate responses.
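To make "instruction-tuned" concrete, here is a rough sketch of how a conversation is typically serialized before it reaches a chat model. It assumes a ChatML-style layout with `<|im_start|>` and `<|im_end|>` markers, which is my understanding of the format used by Qwen2 instruct models; the `to_chatml` helper is hypothetical, and in practice a tokenizer's built-in chat template should be preferred.

```python
def to_chatml(messages: list[dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Serialize role/content messages into a ChatML-style prompt string."""
    parts = []
    for message in messages:
        parts.append(f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n")
    if add_generation_prompt:
        # Open an assistant turn so the model continues with its reply.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = to_chatml([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Summarize YaRN scaling in one sentence."},
])
print(prompt)
```

The model is trained to continue from the open assistant turn, which is how an instruction in the user message turns into a directed response.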

3.3 Coding and Mathematical Reasoning

Qwen2-72B-Instruct is strong at coding and mathematical reasoning. It can write, debug, and optimize code across various programming languages, including complex algorithm design. It also handles mathematical problem-solving in algebra, calculus, and logic puzzles, and provides step-by-step explanations of its solutions. Trained on a diverse dataset, it supports multilingual coding tasks, and its long context window helps when debugging and optimizing code in real-world scenarios.

3.4 Multilingual Support and Cross-Lingual Tasks

Qwen2-72B-Instruct offers multilingual support across 29 languages. Its training data includes diverse linguistic resources, making it proficient at understanding and generating text in multiple languages. The model handles translation, language conversion, and cross-lingual reasoning, allowing users to perform tasks like multilingual summarization and information extraction. This capability is particularly valuable for global applications, where it supports communication and collaboration across linguistic and cultural boundaries while preserving accuracy and context in each supported language.

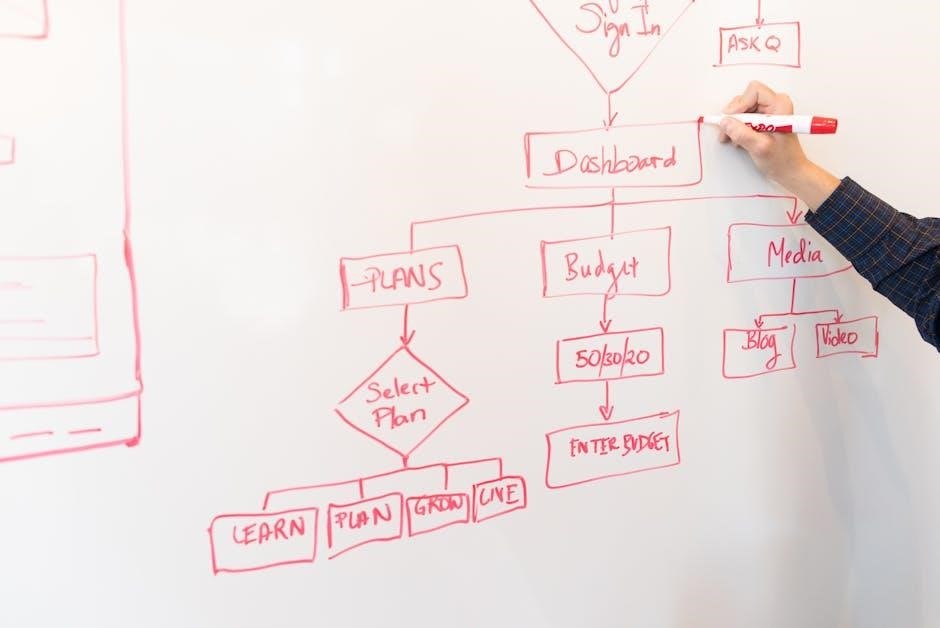

Applications and Use Cases

Qwen2-72B-Instruct suits applications in education, business, content creation, and problem-solving, including tasks like language translation, code generation, and critical thinking.

4.1 Education and Research

Qwen2-72B-Instruct is a useful tool in education and research, with support for 29 languages. It helps students and researchers by providing detailed explanations, assisting with complex queries, and generating high-quality content. Its long context window makes it well suited to analyzing lengthy academic texts, and its language understanding and generation capabilities help it simplify complex concepts for educational institutions and research environments.

4.2 Business and Professional Tasks

Qwen2-72B-Instruct supports business and professional tasks with its language capabilities. It can automate tasks like report generation, email drafting, and data analysis. Its multilingual support helps global businesses communicate and collaborate, and its ability to handle complex queries makes it useful as an aid to decision-making and strategic planning. By streamlining routine workflows, it frees professionals to focus on high-priority tasks.

4.3 Content Creation and Writing

Qwen2-72B-Instruct is a versatile tool for content creation and writing. It assists in drafting articles, blogs, and marketing materials with clarity and coherence. Its multilingual capabilities enable content creation in 29 languages for global audiences. Its grasp of context and tone helps keep output engaging and relevant, while its ability to handle extensive inputs supports long-form content. This makes it a practical asset for writers, marketers, and creators who need to produce professional content efficiently.

4.4 Problem Solving and Critical Thinking

Qwen2-72B-Instruct shows strong problem-solving and critical thinking abilities, drawing on its large training corpus to tackle complex tasks. It performs well in mathematical reasoning, logical analysis, and pattern recognition. Its capacity to process extensive contexts helps it work through long problem statements, while its multilingual support extends its reach to global audiences in education, research, and industry.

Training and Architecture

Qwen2-72B-Instruct was trained on a dataset of over 7 trillion tokens, using a transformer-based architecture with 72 billion parameters.

5.1 Training Data and Dataset Size

Qwen2-72B-Instruct was trained on Alibaba’s dataset of over 7 trillion tokens. This large-scale dataset covers a wide range of texts, including web content, books, and specialized resources, which supports the model's multilingual and complex language processing abilities.

5.2 Architecture and Model Design

Qwen2-72B-Instruct features a transformer-based architecture with 72 billion parameters. Its decoder-only design is built for text generation and instruction following, using multi-head attention and feed-forward networks. The model supports long-context processing up to 131,072 tokens, enabling it to handle extensive inputs. This architecture supports its performance across multilingual and coding tasks as well as general language understanding and generation.
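As a rough illustration of the attention step in a decoder-style transformer, the sketch below implements single-head scaled dot-product attention with a causal mask in plain Python. It is a generic textbook version, not the model's actual implementation, and the function name and toy inputs are made up for the example.

```python
import math


def causal_attention(q: list[list[float]], k: list[list[float]],
                     v: list[list[float]]) -> list[list[float]]:
    """Single-head scaled dot-product attention where token i sees tokens 0..i."""
    d = len(q[0])
    dim_v = len(v[0])
    out = []
    for i, qi in enumerate(q):
        # Causal mask: only score positions up to and including i.
        scores = [sum(a * b for a, b in zip(qi, k[j])) / math.sqrt(d)
                  for j in range(i + 1)]
        # Numerically stable softmax over the visible positions.
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Weighted sum of the visible value vectors.
        out.append([sum(weights[j] * v[j][c] for j in range(i + 1))
                    for c in range(dim_v)])
    return out


tokens = [[1.0, 0.0], [0.0, 1.0]]
result = causal_attention(tokens, tokens, tokens)
print(result[0])  # first token can only attend to itself
```

The causal mask is what makes the design suitable for generation: each position is computed only from earlier tokens, so the model can extend a sequence one token at a time.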

5.3 Fine-Tuning and Optimization Approaches

Qwen2-72B-Instruct was fine-tuned with both supervised learning and direct preference optimization (DPO). These methods improve how well its responses align with user instructions, raising relevance and quality. The model’s training data, sourced from diverse texts, supports its performance across languages and tasks. Additionally, parameter-efficient techniques were applied to ease deployment across various applications. Together, these approaches make Qwen2-72B-Instruct a strong instruction-tuned model for complex and nuanced tasks.
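For readers unfamiliar with direct preference optimization, here is a minimal sketch of the standard DPO loss for a single preference pair, written in plain Python. It follows the commonly published formulation and is not taken from Alibaba's training code; the function name, the example log-probabilities, and `beta=0.1` are illustrative assumptions.

```python
import math


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for one (chosen, rejected) pair of sequence log-probabilities."""
    # How much more the policy likes each response than the frozen reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(logits)), computed in a numerically stable way.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))


# Policy identical to the reference: loss is log(2).
print(dpo_loss(-3.0, -3.0, -3.0, -3.0))
# Policy favors the chosen answer more than the reference does: loss drops.
print(dpo_loss(-1.0, -5.0, -3.0, -3.0))
```

Minimizing this quantity pushes the model to raise the likelihood of preferred responses relative to rejected ones, without training a separate reward model.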

Strengths and Limitations

Qwen2-72B-Instruct excels in handling complex tasks with its 72B parameters and multilingual support. However, its high computational demands and context limits may pose challenges.

6.1 Performance in Complex Tasks

Qwen2-72B-Instruct performs strongly on complex tasks, using its 72 billion parameters and a context length of up to 131,072 tokens. Its language understanding carries over to coding, mathematical reasoning, and multilingual tasks, and it handles information extraction and cross-lingual operations well. Its post-training methods further improve accuracy and reliability in demanding scenarios.

6.2 Limitations and Potential Drawbacks

Despite its strengths, Qwen2-72B-Instruct has limitations. Its 72 billion parameters require significant computational resources and energy, which limits accessibility. The model’s high memory demands and need for specialized hardware can hinder deployment in resource-constrained environments. Additionally, it may not perform optimally in highly specialized or niche applications without additional fine-tuning.
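A back-of-the-envelope estimate shows why memory is a constraint. The sketch below multiplies the 72 billion parameters quoted above by the storage size of common numeric formats; it counts weights only and ignores activations, the attention cache, and framework overhead, so real requirements are higher. The helper name and the table of formats are illustrative.

```python
PARAMS = 72e9  # parameter count stated in this article

# Bytes needed to store one parameter in each format.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params: float, dtype: str) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: ~{weight_memory_gb(PARAMS, dtype):.0f} GB")
```

At 2 bytes per parameter the weights alone come to roughly 144 GB, which is why multi-GPU setups or quantized formats are the usual route for a model of this size.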

Future Directions and Updates

Future updates for Qwen2-72B-Instruct may include expanded language support, enhanced reasoning capabilities, and optimizations for efficiency, ensuring better accessibility and performance across diverse applications.

7.1 Upcoming Features and Improvements

Future updates for Qwen2-72B-Instruct may focus on enhancing multilingual capabilities, improving reasoning and problem-solving skills, and optimizing efficiency. The model could also see support for more languages, more advanced fine-tuning techniques, and better integration with developer tools. Improvements in context handling and token processing may follow, enabling more complex tasks. Alibaba may also prioritize responsible AI practices while maintaining the model's strength in instruction-tuned tasks.

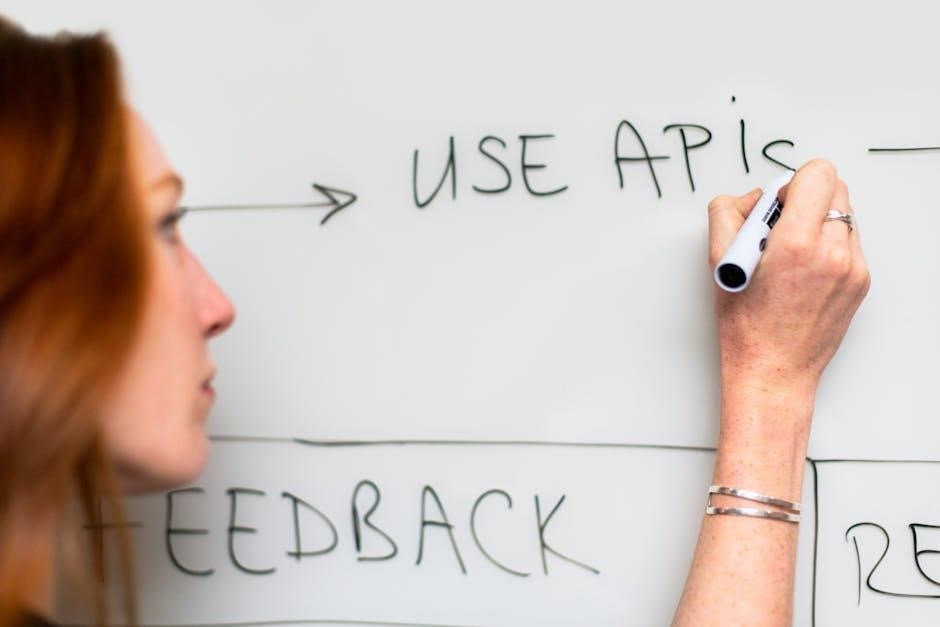

7.2 Community and Developer Engagement

Qwen2-72B-Instruct fosters community and developer engagement through open-source contributions and collaborative platforms. Developers can access tools and resources for fine-tuning and deploying the model. Workshops and hackathons encourage innovation, while feedback loops help refine future updates. The model’s multilingual support and broad capabilities have made it popular among researchers and educators, which promotes adoption and continuous improvement through community-driven initiatives.

Conclusion

Qwen2-72B-Instruct stands out as a robust, versatile model for multilingual tasks, coding, and reasoning, making it a valuable tool for diverse applications.

8.1 Final Thoughts on Qwen2-72B-Instruct

Qwen2-72B-Instruct offers strong capabilities in multilingual support, coding, and reasoning. Its ability to process up to 131,072 tokens makes it well suited to complex, long-context tasks. With support for 29 languages, it serves a global audience across education, research, and professional environments. Its strong performance in natural language understanding and generation makes it a valuable tool for both practical and experimental use cases.